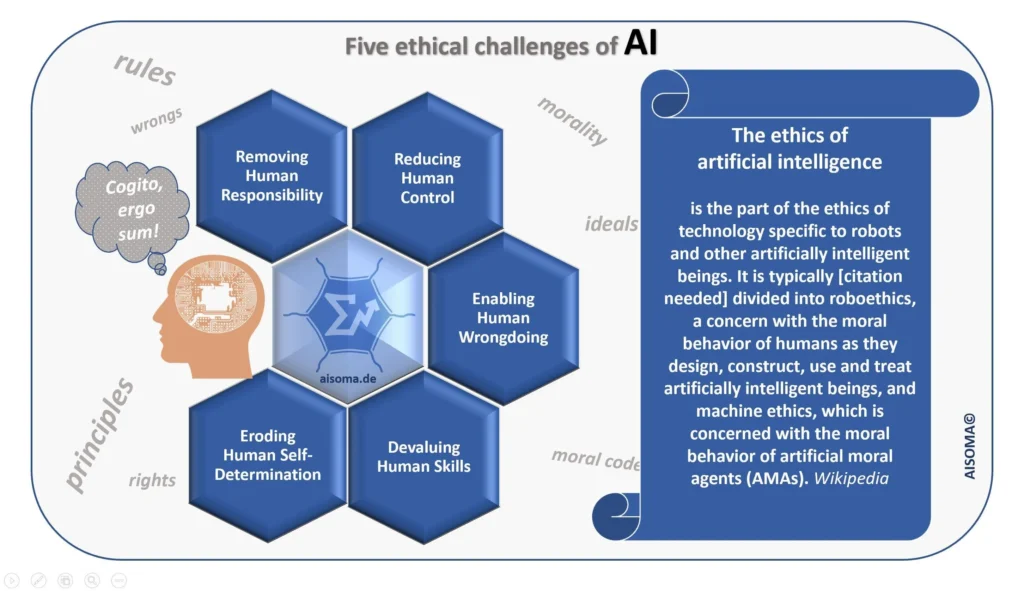

As artificial intelligence (AI) continues to evolve and spread into more aspects of daily life, its ethical challenges have become increasingly prominent. From algorithmic bias to privacy concerns, AI technologies raise critical questions about fairness, accountability, and transparency. Understanding these dilemmas is essential for navigating the complex landscape of modern technology and for ensuring that AI benefits humanity.

In this article, we examine the many ethical challenges posed by artificial intelligence. We look at how biases in AI algorithms can perpetuate discrimination, harming marginalized communities and distorting consequential decisions. We also explore why data privacy matters and what risks arise when AI systems collect and use personal information.

We then turn to accountability and the difficulty of assigning responsibility when AI systems go wrong, followed by job displacement, surveillance, automated decision-making, autonomous weapons, and the digital divide. Throughout, we consider the ethical frameworks that can guide how AI technologies are developed and deployed, and what these challenges mean for our future.

Bias in AI Algorithms

Bias in artificial intelligence algorithms is a significant ethical challenge that can lead to unfair treatment of individuals based on race, gender, or socioeconomic status. Because many AI systems learn from historical data, they can absorb the biases embedded in that data and reproduce them in their decisions. For instance, some facial recognition systems have been shown to misidentify people from certain racial and ethnic groups at higher rates than white individuals, raising concerns about discrimination and inequality.

Addressing bias in AI requires a multifaceted approach, including more diverse training datasets and fairness-aware algorithms. Organizations must make ethics a priority in AI development so that these technologies serve all segments of society equitably. This challenge also highlights the importance of transparency and accountability in AI systems.
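Bias has to be measured before it can be addressed. As a minimal sketch, assuming you already have a model's predictions, the true labels, and a group label for each record, the Python below compares error rates across groups, in the spirit of the facial recognition example above. The function names and toy data are hypothetical, and per-group error rate is only one simple audit metric, not a complete fairness methodology.

```python
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups):
    """Return the share of incorrect predictions for each group."""
    errors = defaultdict(int)
    totals = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_error_ratio(rates):
    """Worst group's error rate divided by the best group's (1.0 means parity)."""
    highest = max(rates.values())
    lowest = min(rates.values())
    if highest == 0:
        return 1.0  # no errors in any group
    if lowest == 0:
        return float("inf")  # one group is never wrong while another sometimes is
    return highest / lowest


# Toy, made-up data: true labels, model predictions, and a group label per record.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = error_rates_by_group(y_true, y_pred, groups)
print(rates)                   # {'A': 0.25, 'B': 0.5}
print(max_error_ratio(rates))  # 2.0
```

A ratio well above 1.0 signals that one group bears a disproportionate share of the model's mistakes and deserves closer investigation; which metric and which tolerance are appropriate depends on the application.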

Privacy Concerns

As AI technologies become more integrated into daily life, privacy concerns have grown. AI systems often require vast amounts of personal data to function effectively, which increases the risk of misuse and of unauthorized access to sensitive information. Collecting and storing this data also raises ethical questions about consent and the right to privacy.

To mitigate privacy risks, organizations must adopt robust data protection measures and comply with regulations such as the European Union's General Data Protection Regulation (GDPR). Privacy-by-design, which builds data protection into a system from the outset rather than adding it afterward, helps keep user privacy a priority throughout the AI development process and fosters trust between users and technology providers.
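Two privacy-by-design techniques that are easy to illustrate are data minimization (keeping only the fields a system actually needs) and pseudonymization (replacing direct identifiers). The Python below is a minimal sketch under stated assumptions: the field allow-list, the record layout, and the function names are hypothetical, and HMAC-SHA256 from the standard library stands in for whatever keyed-hash scheme an organization actually adopts.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this model actually needs.
ALLOWED_FIELDS = {"age_band", "region", "purchase_count"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Drop fields outside the allow-list and swap the raw user ID for a pseudonym."""
    reduced = {field: value for field, value in record.items() if field in ALLOWED_FIELDS}
    reduced["pseudonym"] = pseudonymize(record["user_id"], secret_key)
    return reduced


# In practice the key would come from a secrets manager, never from source code.
secret = b"example-key-for-illustration-only"
raw = {
    "user_id": "u-123",
    "email": "person@example.com",
    "age_band": "30-39",
    "region": "EU",
    "purchase_count": 4,
}
print(sorted(minimize_record(raw, secret)))
# ['age_band', 'pseudonym', 'purchase_count', 'region']
```

Keyed hashing lets records from the same user be linked without storing the raw ID, but pseudonymized data can still count as personal data under the GDPR, so it complements rather than replaces other safeguards.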

Accountability and Responsibility

Determining accountability in AI systems poses a significant ethical dilemma. When an AI system makes a mistake, such as causing harm or making an incorrect decision, it can be difficult to identify who is responsible: the developers, the users, or the AI itself. This ambiguity complicates the legal and ethical frameworks surrounding AI deployment.

Establishing clear guidelines for accountability is essential to address this challenge. Organizations should implement comprehensive risk assessments and create policies that define the roles and responsibilities of all stakeholders involved in AI development and deployment. This clarity can help ensure that ethical standards are upheld and that individuals are held accountable for their actions.

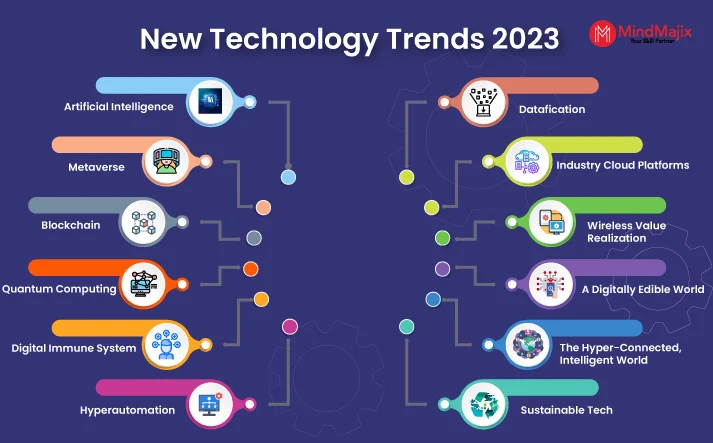

Job Displacement and Economic Impact

The spread of AI technologies has fueled concerns about job displacement and its economic impact on the workforce. As automation becomes more prevalent, many traditional jobs may become obsolete, which could cause significant economic disruption and deepen social inequality. This challenge calls for rethinking workforce development and education systems to prepare people for a changing job landscape.

To address these concerns, policymakers and organizations must collaborate to create reskilling and upskilling programs that equip workers with the skills needed for emerging roles in an AI-driven economy. Emphasizing lifelong learning and adaptability will be crucial in mitigating the negative effects of job displacement while harnessing the benefits of AI technologies.

Ethical Use of AI in Surveillance

The use of AI in surveillance raises profound ethical questions about civil liberties and human rights. Governments and organizations increasingly deploy AI for monitoring, for example facial recognition in public spaces, which can lead to invasive practices that infringe on individual privacy and freedom. Surveillance data can also be misused, posing significant risks to society.

To navigate this ethical challenge, it is essential to establish clear regulations governing the use of AI in surveillance. Transparency in surveillance practices and the implementation of oversight mechanisms can help protect citizens’ rights while ensuring that AI technologies are used responsibly and ethically.

AI in Decision-Making Processes

AI systems are increasingly being used in decision-making processes across various sectors, including healthcare, finance, and criminal justice. While AI can enhance efficiency and accuracy, reliance on automated systems raises ethical concerns about the potential for dehumanization and lack of empathy in critical decisions. The challenge lies in balancing the benefits of AI with the need for human oversight and ethical considerations.

To address this issue, organizations should implement hybrid decision-making models that combine AI capabilities with human judgment. This approach can help ensure that ethical considerations are integrated into decision-making processes, preserving the human element in critical areas where empathy and understanding are essential.
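One way to structure such a hybrid model is to let the system act on its own only when it is confident and the stakes are low, and to route every other case to a human reviewer. The Python below is a minimal sketch assuming a model that outputs a confidence score; the `Proposal` class, the `route` function, and the 0.9 threshold are hypothetical choices for illustration, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    """A decision proposed by a model, awaiting routing."""
    case_id: str
    outcome: str       # the model's proposed outcome, e.g. "approve" or "deny"
    confidence: float  # the model's confidence score, between 0 and 1
    high_stakes: bool  # set by policy, e.g. denials of medical care or credit


def route(proposal: Proposal, threshold: float = 0.9) -> str:
    """Automate only confident, low-stakes cases; send everything else to a person."""
    if proposal.high_stakes or proposal.confidence < threshold:
        return "human_review"
    return "auto"


cases = [
    Proposal("c1", "approve", 0.97, high_stakes=False),
    Proposal("c2", "deny", 0.97, high_stakes=True),
    Proposal("c3", "approve", 0.62, high_stakes=False),
]
for case in cases:
    print(case.case_id, route(case))
# c1 auto
# c2 human_review
# c3 human_review
```

The high-stakes flag overrides confidence, so even a highly confident model never decides those cases alone. Model confidence scores are not always well calibrated, so the threshold itself would need to be validated before use.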

The Future of Autonomous Weapons

The development of autonomous weapons powered by AI presents a significant ethical challenge in the realm of warfare and conflict. The potential for machines to make life-and-death decisions raises profound moral questions about accountability, the value of human life, and the implications of delegating lethal force to algorithms. This challenge necessitates a critical examination of the ethical implications of AI in military applications.

International regulations and treaties may be necessary to govern the use of autonomous weapons and ensure that ethical standards are upheld in warfare. Engaging in global discussions about the implications of AI in military contexts can help establish norms and guidelines that prioritize human rights and ethical considerations in conflict situations.

The Digital Divide and Access to AI Technologies

The digital divide refers to the gap between individuals who have access to digital technologies and those who do not. As AI technologies become more prevalent, this divide poses an ethical challenge, as marginalized communities may be left behind in the AI revolution. Ensuring equitable access to AI technologies is essential for fostering inclusivity and preventing further social inequality.

To bridge the digital divide, stakeholders must invest in infrastructure, education, and training programs that promote access to AI technologies for all individuals. By prioritizing inclusivity and equitable access, society can harness the full potential of AI while ensuring that its benefits are shared broadly across diverse communities.

The table below summarizes the main ethical challenges of artificial intelligence.

| Challenge | Description |
|---|---|
| Bias and Discrimination | AI systems can perpetuate or even exacerbate existing biases in data, leading to unfair treatment of individuals based on race, gender, or other characteristics. |
| Privacy Concerns | The use of AI in data collection and surveillance raises significant privacy issues, as individuals may not be aware of how their data is being used. |
| Accountability | Determining who is responsible for the actions of AI systems can be challenging, especially in cases of errors or harm caused by autonomous systems. |
| Job Displacement | Automation through AI can lead to significant job losses in various sectors, raising concerns about economic inequality and the future of work. |
| Security Risks | AI technologies can be exploited for malicious purposes, such as creating deepfakes or conducting cyberattacks, posing risks to individuals and society. |
| Transparency | Many AI systems operate as “black boxes,” making it difficult to understand how decisions are made, which can undermine trust and accountability. |
| Ethical Use of AI | There are ongoing debates about the ethical implications of using AI in sensitive areas such as healthcare, law enforcement, and military applications. |
